The world just got one step closer to the bleak futures depicted in Black Mirror, as one woman detailed how an AI video stole her likeness.

Oh great, new horrors to be wary of as artificial intelligence videos get better and better.

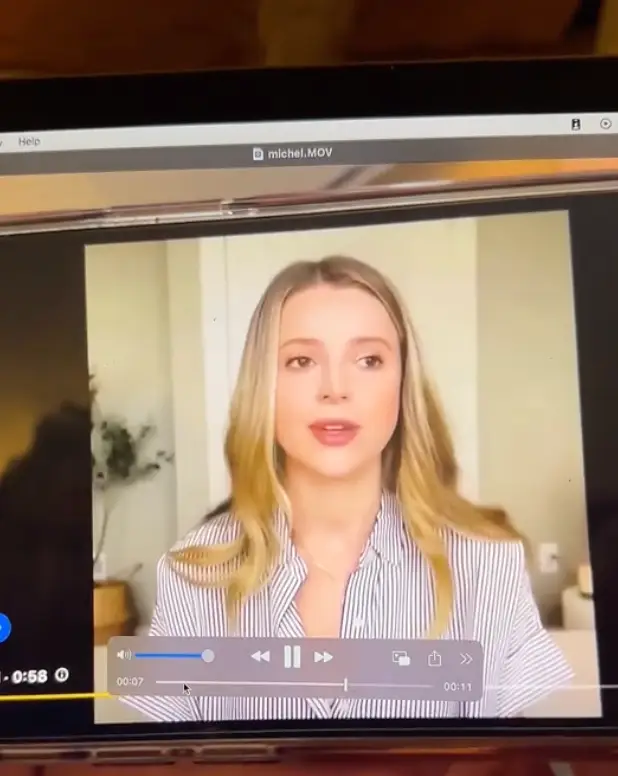

TikToker Michel Janse explained to her followers in March that her likeness and voice had been used to promote a brand's erectile dysfunction pill.

Michel suggested AI technology had been used to create the deepfake video in order to make it convincing.

On top of this already eerie revelation, Michel found out about this after coming back from her honeymoon.

Speaking to her followers on her page, @michel.c.janse, she explained just how disgusted she was at the actions of the company.

“The thing that feels most violating about this is that they pulled footage from by far the most vulnerable video I had ever posted on my channel,” she said in her video that has been viewed over 1.2 million times.

“I was sitting in my bedroom explaining very traumatic and difficult things that I had gone through in the years prior.”

She explained that the ad showed her in the bedroom of her old apartment, wearing her clothes, talking about the company's pill and discussing the sexual difficulties her partner was supposedly having.

Michel reaffirmed it wasn’t her voice and said that while she wasn’t happy showing the actual ad, it was important to do so in order for people to see how much AI is improving.

And to be fair, it looks very much like her and sounds just as convincing.

After picking their jaws up from the floor, social media users advised the TikToker to consider legal action to prevent this from becoming the norm in the future.

“Get a lawyer, set a precedent that establishes protections for everyone else in the future. I’m terribly sorry this happened to you,” one user wrote.

“This is a huge lawsuit - make some calls, find a good lawyer, you will win and it will bring attn to this issue. I am so sorry this happened to you!” another wrote.

“For your sake and for OUR sake, please please sue. People need to be holding companies accountable for this before they get even more comfortable,” a third wrote.

Others said they would be more wary about putting their likeness on the internet as deepfakes become more believable. I don’t blame them, to be honest.