While there are many perks to artificial intelligence (AI), it can sometimes be used for the wrong reasons.

AI can be used in many ways, from acting as a personal assistant or shopper to summarizing long-form articles and creating formulas.

But it can also be used for things that cause serious problems, deepfakes being one of them.

Famous faces such as Megan Thee Stallion and Taylor Swift have been targeted with X-rated deepfakes, and now fake content depicting a supposed historical earthquake is being created.

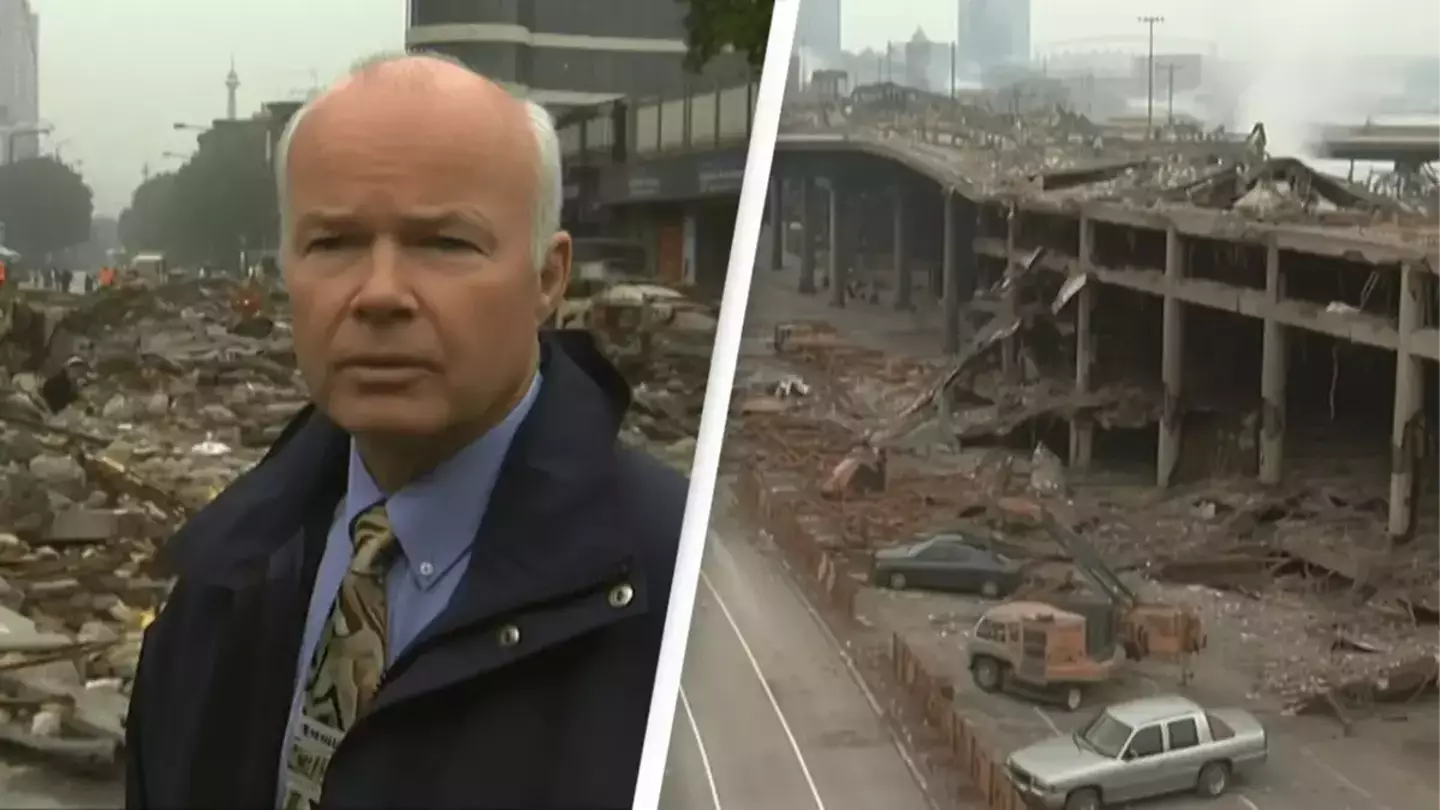

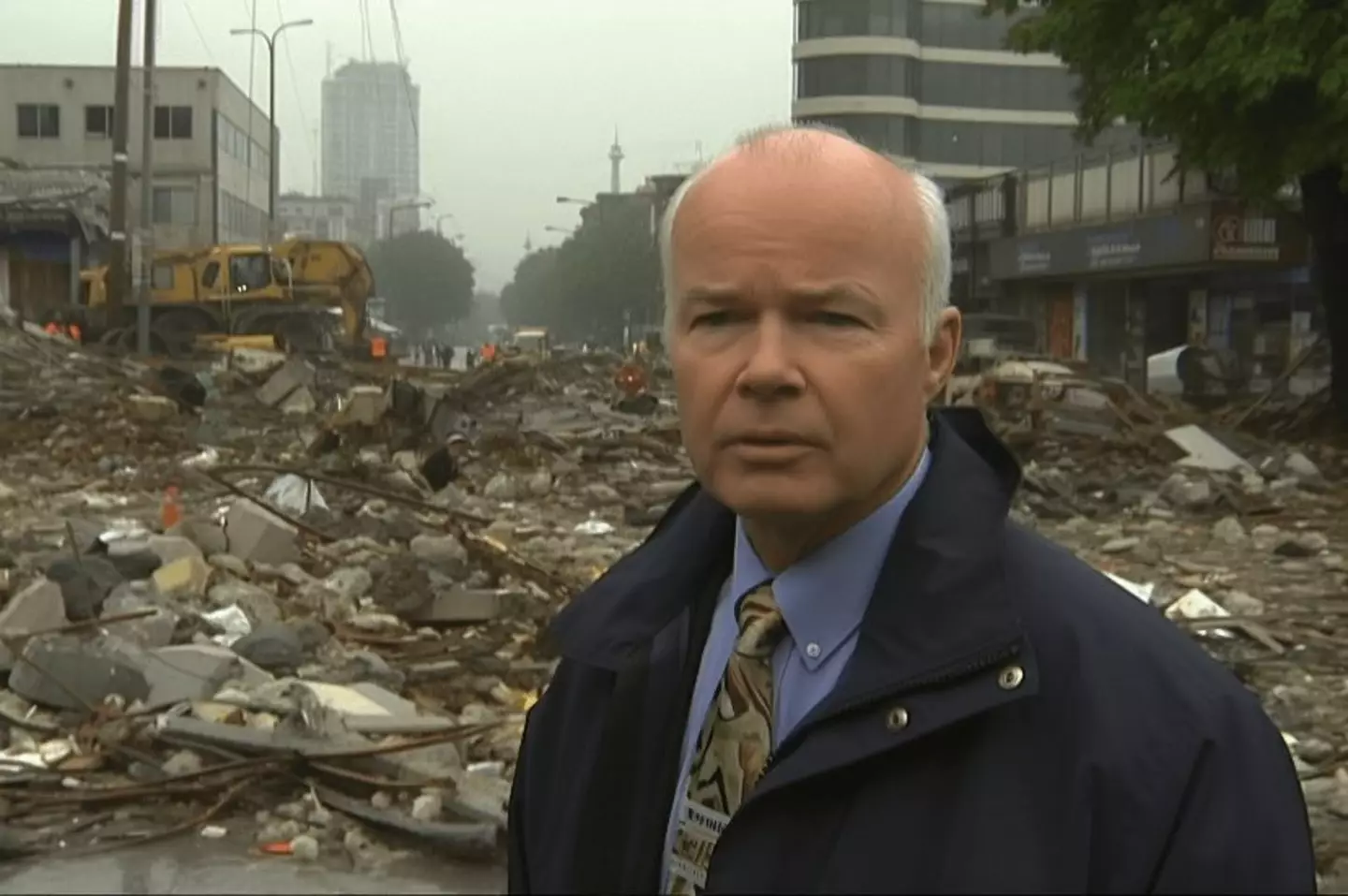

The photos in question were first posted to the Midjourney subreddit last year. The series of twenty shots was titled: "The 2001 Great Cascadia 9.1 Earthquake & Tsunami - Pacific Coast of US/Canada."

The photos included scenes of rubble, collapsed bridges, worried families, news reporters and even former President George W. Bush amid all the destruction.

The images looked so realistic, right down to the pixels, that many people were shocked to find out they were completely fake.

Dozens of people have since flocked to the post's comment section to share their reactions to the AI con.

Some found the whole situation totally bizarre.

"Was I the only one who was like 'How come I don't remember this happening?' until seeing the subreddit?" one person admitted.

A second replied: "Me too dude. I thought was I living under a rock or something that I didn’t hear about this."

"This is what insanity is made of," claimed a third.

Others, however, took the opportunity to highlight the problem of misinformation.

Someone pointed out: "If people today are already easily misled by social media posts containing nothing but text - what will image and video of perfect quality do? Do you think they'll check the source?"

"We already don't know what parts of history are real. I think now we have a real difficult time with misinformation," added another.

The concerning thread eventually made its way to X, with software expert Justine Moore taking the time to debunk the fictitious photos.

She posted that 'something wild' was going round on the platform.

"People are telling stories and sharing photos of historic events," the tweet continued.

But, as she explained to her followers, the images were far from authentic: the supposed decades-old event never actually happened.

Some weren't so easily fooled by the AI-generated images, with one X user stating: "It's pretty obvious since none of these look like real people even slightly."

Justine then replied: "You don’t think these look real?? With the exception of the kid’s hand that has 7 fingers, it all looks pretty real to me…"